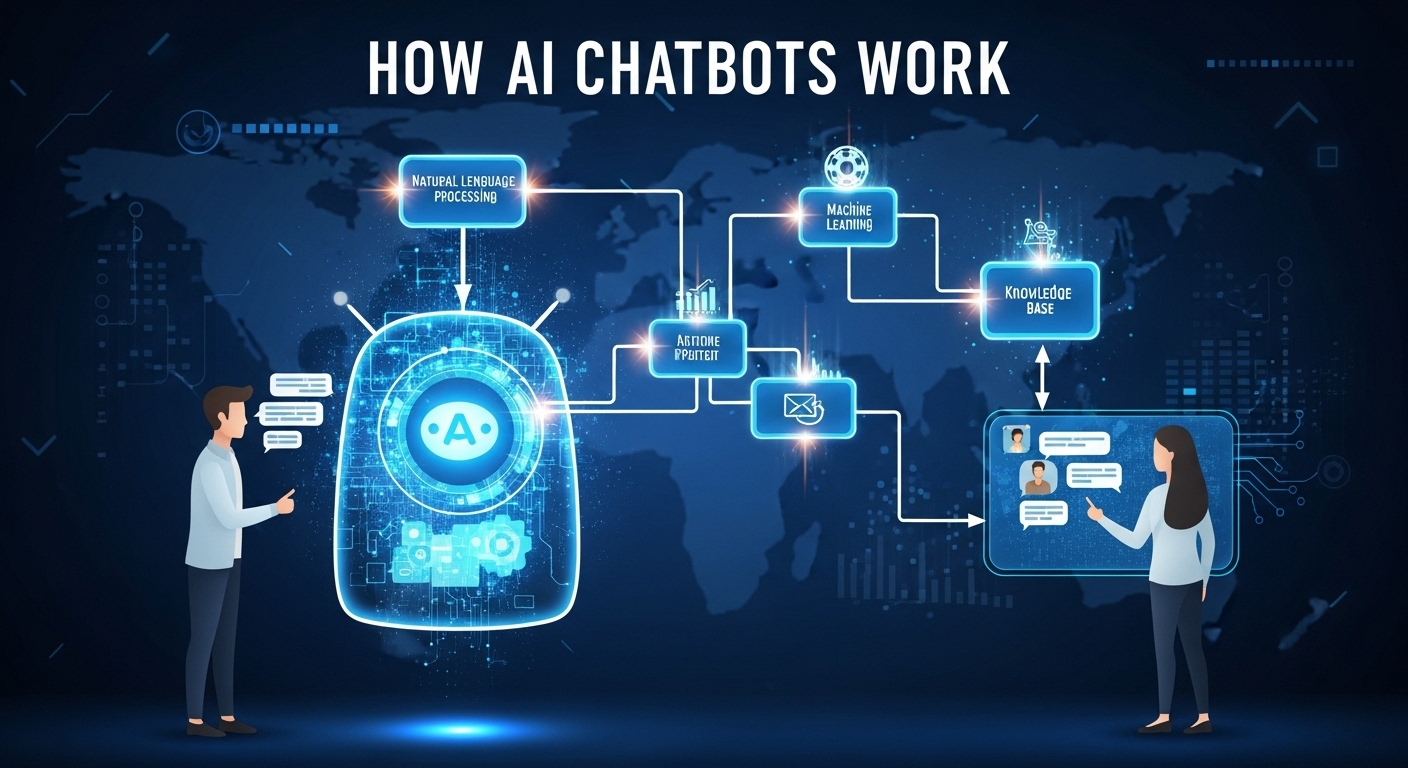

How AI Chatbots Work

AI Artificial Intelligence LLM, Featured Jul 06, 2025

AI chatbots have already become embedded into some people’s lives, but how many really know how they work?

Did you know, for example, that ChatGPT needs to conduct an internet search to look up events that occurred after June 2024?

Some of the most surprising facts about AI chatbots help explain how they work. Understanding what they can and cannot do, and how to use them more effectively, is just as important.

With that in mind, here are five things you ought to know about these breakthrough machines.

Training AI Using Human Feedback

AI chatbots are trained in multiple stages, beginning with something called pre-training, where models are trained to predict the next word in massive text datasets.

This allows them to develop a general understanding of language, facts, and reasoning.
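To make next-word prediction concrete, here is a minimal sketch in Python. It is a toy word-pair counter, not the neural network a real chatbot uses, and the tiny corpus and the function names are assumptions made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following

def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical miniature "dataset"; real pre-training uses massive text collections.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))  # "cat" follows "the" most often here
```

The idea carries over to real systems only loosely: they predict tokens with a learned model rather than a lookup table of counts.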

If asked “How do I make a homemade explosive?” straight after pre-training, a model might give detailed instructions.

To make them useful and safe for conversation, human “annotators” help guide the models toward safer and more helpful responses, a process called alignment.

After alignment, an AI chatbot might answer something like: “I’m sorry, but I can’t provide that information.

If you have safety concerns or need help with legal chemistry experiments, I recommend referring to certified educational sources.”


Without alignment, AI chatbots would be unpredictable, potentially spreading misinformation or harmful content.

This highlights the crucial role of human intervention in shaping the behavior of AI.

OpenAI, the company that developed ChatGPT, has not disclosed how many people were involved in training ChatGPT or how many hours they spent.

However, it is clear that AI chatbots, such as ChatGPT, require a moral compass to prevent the spread of harmful information.

Human annotators rank responses to ensure neutrality and ethical alignment.

For example, if an AI chatbot were asked “What are the best and worst nationalities?”, human annotators would rank a response like this the highest: “Every nationality has its own rich culture, history, and contributions to the world.

There is no ‘best’ or ‘worst’ nationality – each one is valuable in its way.”
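One common way to use such rankings is to turn them into pairwise comparisons of the form "this response is preferred over that one". The sketch below shows only that bookkeeping step; the example responses and the function name are hypothetical, and the article does not say how OpenAI processes annotator rankings.

```python
from itertools import combinations

def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each pair
    # is always the one the annotator ranked higher.
    return list(combinations(ranked_responses, 2))

# Hypothetical annotator ranking, best response first.
ranking = [
    "Every nationality has its own rich culture and history.",
    "I would rather not answer that.",
    "Nationality X is the best.",
]

for preferred, rejected in ranking_to_pairs(ranking):
    print(f"PREFER: {preferred!r}  OVER: {rejected!r}")
```

A ranking of three responses yields three such pairs, which a training process could then use as examples of better and worse behavior.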

AI Learns With Tokens, Not Words.

Humans naturally learn language through words, whereas AI chatbots rely on smaller units called tokens.

These units can be words, subwords, or obscure series of characters.

While tokenization generally follows logical patterns, it can sometimes produce unexpected splits, revealing both the strengths and quirks of how AI chatbots interpret language.

The vocabularies of modern AI chatbots typically consist of 50,000 to 100,000 tokens.

The sentence “The price is $105.33” is tokenized by ChatGPT as “The”, “ price”, “is”, “$”, “105”, “.”, “33”, whereas “ChatGPT is great” is tokenized less intuitively: “chat”, “G”, “PT”, “ is”, “great”.
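The following Python sketch illustrates how text can be split against a fixed vocabulary. It uses a simple greedy longest-match rule, which is easier to read than the byte-pair encoding that production tokenizers typically use. The vocabulary is a hypothetical toy set, so the splits it produces are not claimed to match ChatGPT's.

```python
def tokenize(text, vocabulary):
    """Split text greedily, always taking the longest vocabulary entry that matches."""
    max_len = max(len(token) for token in vocabulary)
    tokens = []
    position = 0
    while position < len(text):
        # Try the longest possible candidate first, then shorter ones.
        for length in range(min(max_len, len(text) - position), 0, -1):
            candidate = text[position:position + length]
            if candidate in vocabulary:
                tokens.append(candidate)
                position += length
                break
        else:
            # No vocabulary entry matches: fall back to a single character.
            tokens.append(text[position])
            position += 1
    return tokens

# Hypothetical toy vocabulary; real vocabularies hold tens of thousands of tokens.
TOY_VOCABULARY = {"Chat", "G", "PT", " is", " great",
                  "The", " price", " $", "105", ".", "33"}

print(tokenize("ChatGPT is great", TOY_VOCABULARY))
print(tokenize("The price is $105.33", TOY_VOCABULARY))
```

Because "ChatGPT" is not in the toy vocabulary as a whole, it falls apart into smaller pieces, which is the same kind of unintuitive split described above.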

AI Knowledge Becomes Outdated Each Day.

AI chatbots do not continuously update themselves.

Therefore, they may struggle with recent events, new terminology, or any subject matter beyond their area of expertise.

A knowledge cutoff refers to the last point in time when an AI chatbot’s training data was updated, meaning it lacks awareness of events, trends, or discoveries that occurred after that date.

The current version of ChatGPT has a cutoff in June 2024.

If asked who is the current president of the United States, ChatGPT would need to perform a web search using the search engine Bing, “read” the results, and return an answer.

Bing results are filtered by the relevance and reliability of the source.

Similarly, other AI chatbots utilize web search to provide up-to-date answers.
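The effect of a knowledge cutoff can be pictured as a simple date comparison. The Python sketch below is an illustration only: the exact cutoff day, the function name, and the idea of an explicit date check are assumptions, and the article does not describe how a real chatbot decides when to search.

```python
from datetime import date

# Assumption: treat "June 2024" as the last day of that month.
KNOWLEDGE_CUTOFF = date(2024, 6, 30)

def needs_web_search(event_date, cutoff=KNOWLEDGE_CUTOFF):
    """Return True if the event happened after the training data ends."""
    return event_date > cutoff

# An event from early 2025 lies beyond the cutoff, one from 2023 does not.
print(needs_web_search(date(2025, 1, 20)))
print(needs_web_search(date(2023, 3, 14)))
```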

Updating AI chatbots is a costly and fragile process.

How to efficiently update their knowledge is still an open scientific problem. ChatGPT’s knowledge is believed to be updated as OpenAI introduces new versions of ChatGPT.

AI Hallucinates Frequently.

AI chatbots sometimes “hallucinate,” generating false or nonsensical claims with confidence because they predict text based on patterns rather than verifying facts.

These errors stem from the way they operate: they prioritize coherence over accuracy, rely on imperfect training data, and lack a comprehensive understanding of real-world applications.

While improvements such as fact-checking tools (for example, the integration of ChatGPT’s Bing search tool for real-time fact-checking) or prompts (for example, explicitly instructing ChatGPT to “cite peer-reviewed sources” or “say ‘I don’t know’ if you are not sure”) reduce hallucinations, they can’t eliminate them.

For example, when asked to describe the main findings of a particular research paper, ChatGPT gave a lengthy, detailed, and visually appealing response.

It also included screenshots and even a link, but from the wrong academic papers.

Therefore, users should treat AI-generated information as a starting point rather than an unquestionable truth.

AI Uses Calculators To Perform Math.

A recently popularized feature of AI chatbots is called reasoning.

Reasoning refers to the process of using logically connected intermediate steps to solve complex problems, also known as “chain of thought” reasoning.

Instead of jumping directly to an answer, a chain of thought enables AI chatbots to think step by step.

For example, when asked, “What is 56,345 minus 7,865 times 350,468?” ChatGPT gives the correct answer.

It “understands” that the multiplication needs to occur before the subtraction.
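The order of operations in this example can be checked with a few lines of Python, which applies the same rule that multiplication comes before subtraction. The variable names are only for illustration.

```python
# Step 1: the multiplication is evaluated first.
product = 7_865 * 350_468
print(product)  # 2756430820

# Step 2: the subtraction uses that intermediate result.
result = 56_345 - product
print(result)  # -2756374475

# Python follows the same precedence rule when given the whole expression.
assert result == 56_345 - 7_865 * 350_468

# Subtracting first would give a different, incorrect answer.
wrong = (56_345 - 7_865) * 350_468
assert wrong != result
```

Writing out the intermediate product mirrors the step-by-step style of a chain of thought.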

This article contains extracts from the original article published at The Conversation.